This post explains why Scala projects are difficult to maintain.

Scala is a powerful programming language that can make certain small teams hyper-productive.

Scala can also slow productivity by drowning teams in code complexity or burning them in dependency hell.

Scala is famous for crazy, complex code – everyone knows about that risk factor already.

The rest of this post focuses on the maintenance burden, a less discussed Scala productivity drain.

This post generated lots of comments on Hacker News and Reddit. The Scala subreddit made some fair criticisms of the article, but the main points stand.

Li tweeted the post and empathizes with the difficulties of working in the Scala ecosystem.

Cross compiling Scala libs

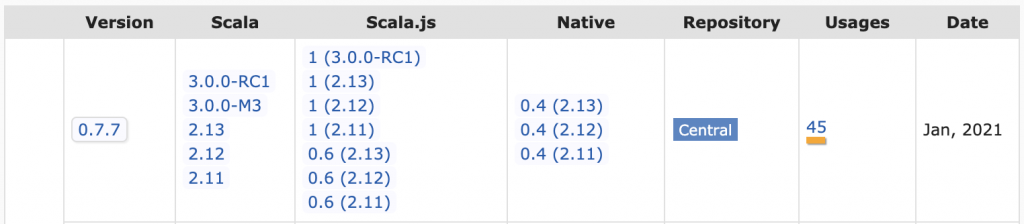

Scala libraries need to be cross compiled with different Scala versions. utest v0.7.7 publishes separate JAR files for Scala 2.11, Scala 2.12, and Scala 2.13 for example.

Scala 2.12 projects that depend on utest v0.7.7 need to grab the JAR file that’s compiled with Scala 2.12. Scala 2.10 users can’t use utest v0.7.7 because there isn’t a JAR file.
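As a rough sketch of how this looks in a build file, the `build.sbt` fragment below pins a Scala version and pulls in utest with `%%`, which appends the Scala binary version to the artifact name. The exact patch versions (2.11.12, 2.12.12, 2.13.5) are illustrative assumptions.

```scala
// build.sbt (illustrative sketch; the patch versions are assumptions)
scalaVersion := "2.12.12"

// A library author lists every Scala version to build and publish against.
// Prefixing a task with "+" (e.g. `sbt +test`) runs it once per entry.
crossScalaVersions := Seq("2.11.12", "2.12.12", "2.13.5")

// %% appends the Scala binary version, so with scalaVersion 2.12.x
// this resolves the artifact utest_2.12, version 0.7.7.
libraryDependencies += "com.lihaoyi" %% "utest" % "0.7.7" % Test
```

With `scalaVersion` set to a 2.10.x release, the same dependency line would look for `utest_2.10`, which doesn’t exist for v0.7.7, so dependency resolution would fail.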

Minor versions are compatible in most languages. Python projects that are built with Python 3.6 are usable in Python 3.7 projects for example.

Scala itself is unstable

Scala doesn’t use major.minor.patch versioning as described in semver. It uses the PVP epoch.major.minor versioning scheme.

So Scala 2.11 => Scala 2.12 is a major release. It’s not a minor release!

Scala major releases are not backwards compatible. Java goes to extreme lengths to maintain backwards compatibility, so Java code that was built with Java 8 can be run with Java 14.

That’s not the case with Scala. Scala code that was built with Scala 2.11 cannot be run with Scala 2.12.

Most of the difficulty of maintaining Scala apps stems from the frequency of major releases.

Scala 2.0 was released in March 2006 and Scala 2.13 was released in June 2019. That’s 13 major releases in 13 years!

Migrating to Scala 2.12 or Scala 2.13 can be hard. These major version bumps can be trivial for some projects and really difficult for others.

Some libs drop Scala versions too early

Some dependencies force you to use old Scala versions that other libraries might stop supporting.

The Databricks platform didn’t start supporting Scala 2.12 till Databricks Runtime 7 was released in June 2020. Compare this with the Scala release dates:

- Scala 2.11: April 2014

- Scala 2.12: November 2016

- Scala 2.13: June 2019

There was a full year where much of the Scala community had switched to Scala 2.13 and the Spark community was still stuck on Scala 2.11. That’s a big gap, especially when you think of Scala minor versions as being akin to major version differences in other languages.

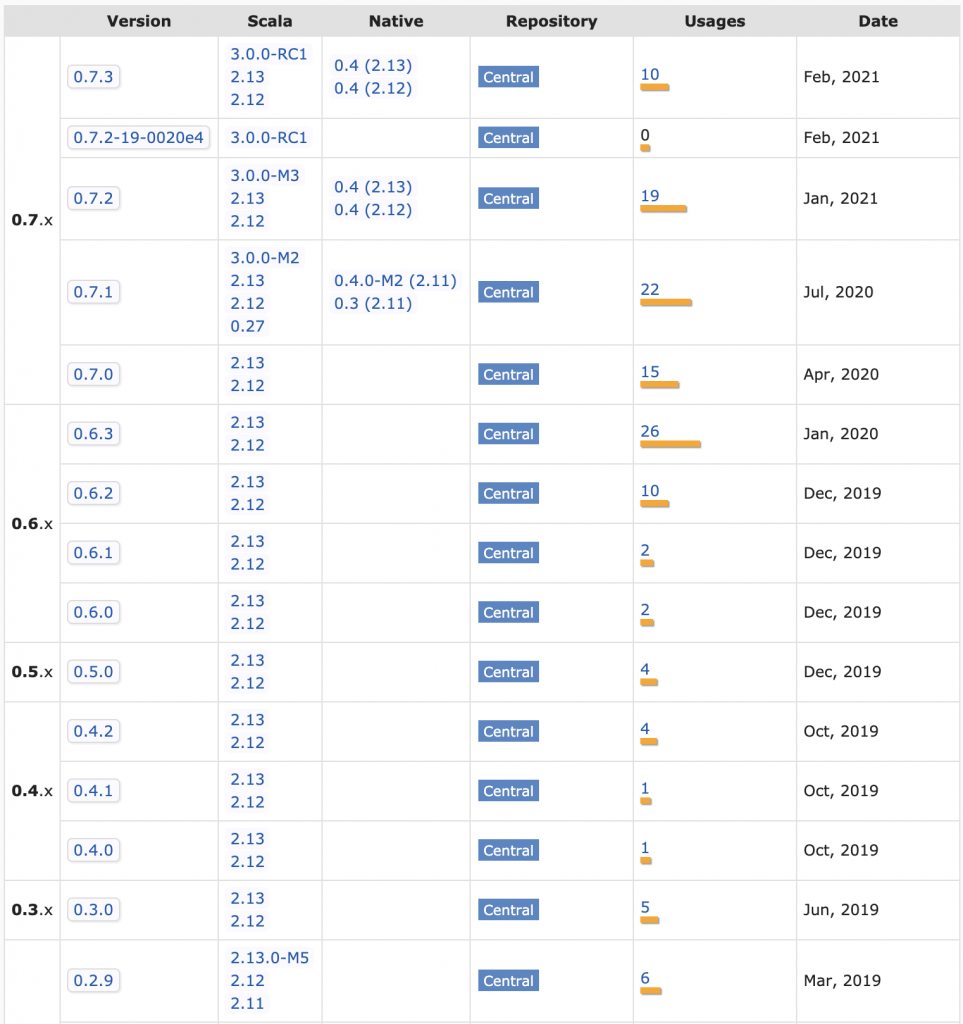

Many Scala projects dropped support for Scala 2.11 long before Spark users were able to upgrade to Scala 2.12.

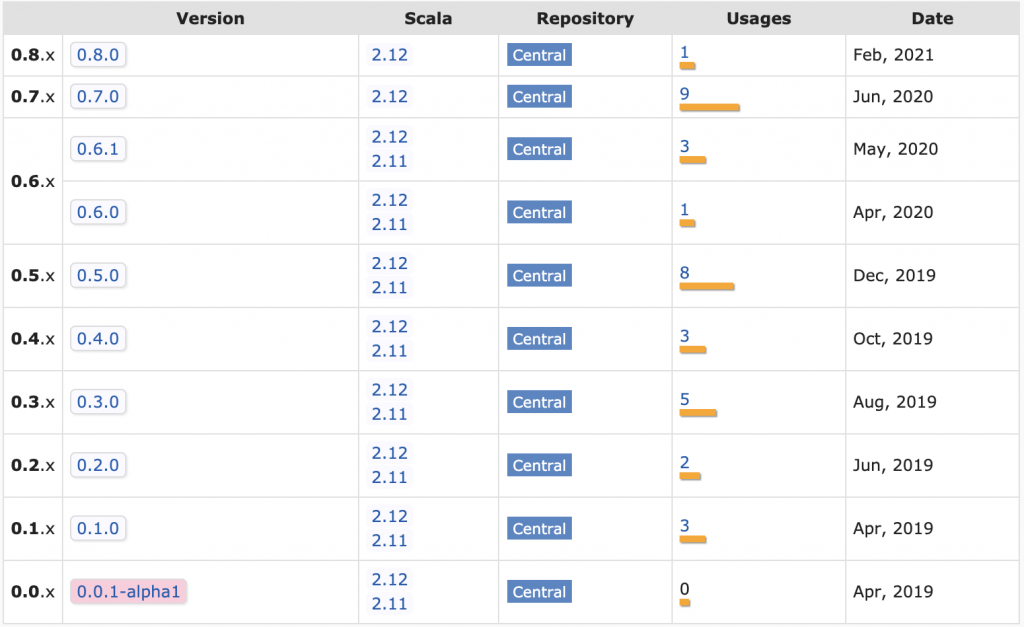

Spark devs frequently needed to search the Maven page for a project and look for the latest release that supported the Scala version they were using.

Abandoned libs

Open source libraries are often abandoned, especially in Scala.

Open source maintainers get tired or shift to different technology stacks. Lots of folks rage quit Scala too (another unique factor of the Scala community).

Generic libraries are usable long after they’re abandoned in many languages.

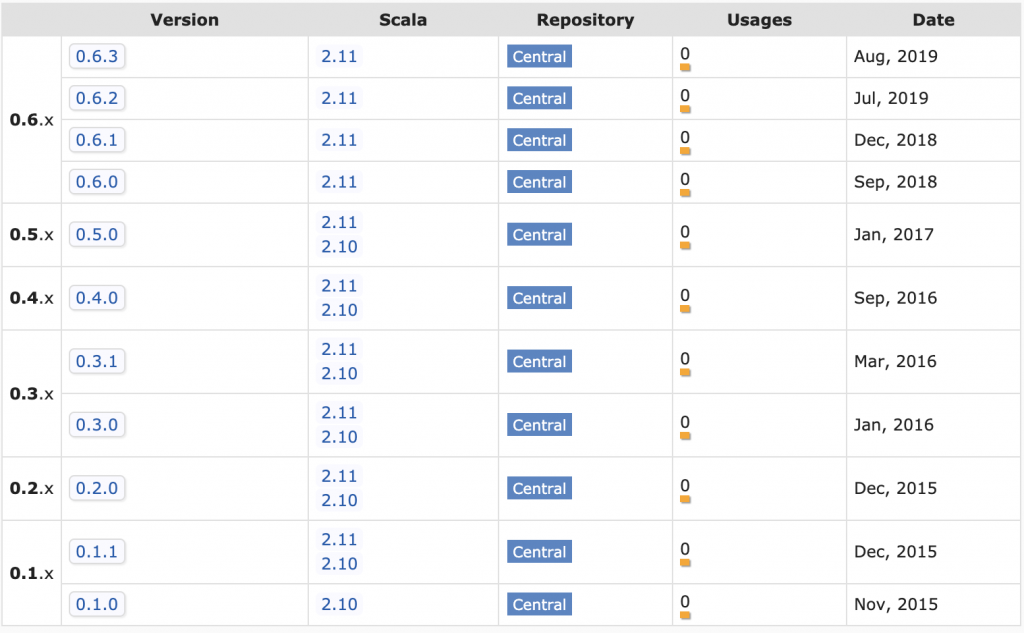

Scala open source libs aren’t usable for long after they’re abandoned. Take spark-google-spreadsheets for example.

The project isn’t maintained anymore and all the JAR files are for Scala 2.10 / Scala 2.11.

Suppose you have a Spark 2 / Scala 2.11 project that depends on spark-google-spreadsheets and would like to upgrade to Spark 3 / Scala 2.12. The spark-google-spreadsheets dependency will prevent you from doing the upgrade. You’ll need to either fork the repo, upgrade it, and publish it yourself or vendor the code in your repo.

Difficult to publish to Maven

Publishing an open source project to Maven is way more difficult than publishing in most language ecosystems.

The sbt-ci-release project provides the best overview of the steps to publish a project to Maven.

You need to open a JIRA ticket to get a namespace, create GPG keys, register keys in a keyserver, and add SBT plugins just to get a manual publishing process working. It’s a lot more work than publishing to PyPI or RubyGems.
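To give a feel for the build-file side of this, here’s a minimal sketch of the project metadata and `publishTo` setting that a Maven Central release typically needs. It only uses core sbt keys. Every name, URL, and email address is a hypothetical placeholder, and signing (sbt-pgp) and credentials are left out.

```scala
// build.sbt (sketch; every name, URL, and email below is a hypothetical placeholder)
organization := "com.example" // must match the namespace you were granted
name         := "my-lib"
homepage     := Some(url("https://github.com/example/my-lib"))
licenses     := Seq("MIT" -> url("https://opensource.org/licenses/MIT"))
developers   := List(
  Developer("example", "Example Dev", "dev@example.com", url("https://github.com/example"))
)
scmInfo := Some(
  ScmInfo(
    url("https://github.com/example/my-lib"),
    "scm:git@github.com:example/my-lib.git"
  )
)

publishMavenStyle := true

// Snapshots and releases go to different Sonatype endpoints.
publishTo := {
  val nexus = "https://oss.sonatype.org/"
  if (isSnapshot.value) Some("snapshots" at nexus + "content/repositories/snapshots")
  else Some("releases" at nexus + "service/local/staging/deploy/maven2")
}
```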

Adopting an unmaintained project and getting it published to Maven yourself is challenging. To be fair, this is equally challenging for any JVM language.

Properly publishing libs

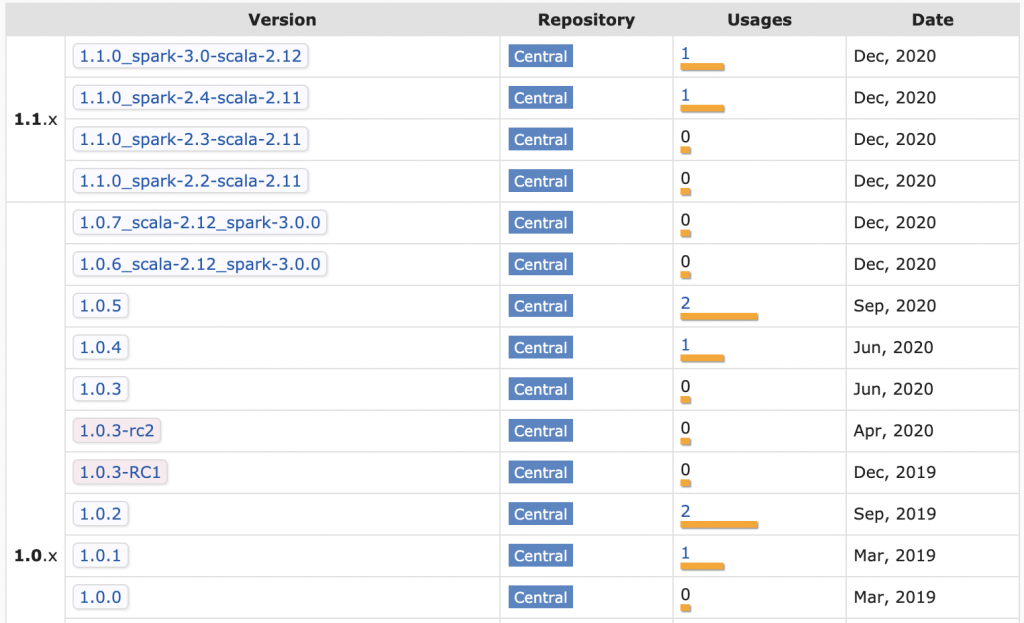

Knowing how to properly publish libraries is difficult as well. Deequ is a popular Spark library for unit testing data that’s fallen into the trap of trying to publish a variety of JAR files for different combinations of Scala / Spark versions per release.

Build matrices are difficult to maintain, especially if you want different combinations of code for different cells in the build matrix.
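As a sketch of what “different code for different cells” can mean in practice, sbt lets you add source directories based on the Scala version being compiled. The directory names below are hypothetical. sbt 1.x also picks up directories like `src/main/scala-2.12` by default, so this kind of setting is only needed for custom groupings.

```scala
// build.sbt (sketch; the directory names are hypothetical)
Compile / unmanagedSourceDirectories += {
  val base = (Compile / sourceDirectory).value // usually src/main
  CrossVersion.partialVersion(scalaVersion.value) match {
    // Code shared by Scala 2.12 and later
    case Some((2, minor)) if minor >= 12 => base / "scala-2.12+"
    // Fallback for Scala 2.11 and older
    case _                               => base / "scala-2.11"
  }
}
```

Every extra dimension (Spark version, Scalatest version) needs its own switch like this one, which is where the maintenance cost comes from.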

The Deequ matrix somehow slipped up and has Scala 2.11 dependencies associated with the Spark 3 JAR. Not trying to single out Deequ, just showing how even well funded, popular projects can get tripped up when dealing with Scala publishing complexity.

The Delta Lake project uses a maintainable release process that avoids the build matrix. The README includes this disclaimer: “Starting from 0.7.0, Delta Lake is only available with Scala version 2.12”.

Take a look at the Delta Lake JAR files in Maven:

This shifts the burden of selecting the right project version to the library user.

SBT

Most Scala projects are built with SBT.

Li detailed the problems with SBT and created a new solution, but new projects are still being built with SBT.

SBT is a highly active project with more than 10,000 total commits, and new features are added frequently.

Scala project maintainers need to track the SBT releases and frequently upgrade the SBT version in their projects. Most SBT releases are backwards compatible thankfully.

The Scala community should be grateful for @eed3si9n’s tireless effort on this project.

SBT plugins

The SBT plugin ecosystem isn’t as well maintained. SBT plugins are versioned and added, separate from regular library dependencies, so SBT projects have two levels of dependency hell (regular dependencies and SBT plugins).

SBT plugins can’t be avoided entirely. You need to add them to perform basic operations like building fat JAR files (sbt-assembly) or publishing JAR files to Maven ([sbt-sonatype](https://github.com/xerial/sbt-sonatype) and sbt-pgp).
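For reference, plugins live in their own file and are declared with `addSbtPlugin` rather than `libraryDependencies`. The snippet below is a sketch of what that file can look like for the three plugins mentioned above; the version numbers are assumptions and will go stale.

```scala
// project/plugins.sbt (sketch; plugin versions are assumptions)
addSbtPlugin("com.eed3si9n"   % "sbt-assembly" % "0.15.0") // fat JAR files
addSbtPlugin("org.xerial.sbt" % "sbt-sonatype" % "3.9.7")  // Sonatype / Maven Central releases
addSbtPlugin("com.github.sbt" % "sbt-pgp"      % "2.1.2")  // artifact signing
```

Each line is one more version to track, separate from the versions in `build.sbt`.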

tut is an example of a plugin that was deprecated and required maintenance action. At least they provided a migration guide.

It’s best to avoid SBT plugins like the plague (unless you like doing maintenance).

Breaking changes (Scalatest)

Scalatest, the most popular Scala testing framework, broke existing import statements in the 3.2 release (a previous version of this article incorrectly stated that the breakage started in the 3.1 release). Users accustomed to libraries that follow semantic versioning were surprised to see their code break when performing a minor version bump.
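Here’s a small sketch of the kind of change involved, using the `FunSuite` to `AnyFunSuite` rename as the example. It assumes Scalatest is on the test classpath, and `CalculatorSpec` is a hypothetical class name.

```scala
// Sketch of the rename, assuming ScalaTest is on the test classpath.

// Compiles on ScalaTest 3.0.x, deprecated in 3.1.x, no longer compiles on 3.2.x:
// import org.scalatest.FunSuite
// class CalculatorSpec extends FunSuite { ... }

// Compiles on ScalaTest 3.1.x and 3.2.x:
import org.scalatest.funsuite.AnyFunSuite

class CalculatorSpec extends AnyFunSuite { // CalculatorSpec is a hypothetical name
  test("addition") {
    assert(1 + 1 == 2)
  }
}
```

The change per file is tiny, but it has to be made in every test file that uses the old names.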

The creator of Scalatest commented on this blog (highly recommend reading his comment) and said “My understanding of the semantic versioning spec has been that it does allow deprecation removals in a minor release”.

Semver states that “Major version MUST be incremented if any backwards incompatible changes are introduced to the public API”. Deleting public facing APIs is a backwards incompatible change.

Semver specifically warns against backwards incompatible changes like these:

Incompatible changes should not be introduced lightly to software that has a lot of dependent code. The cost that must be incurred to upgrade can be significant. Having to bump major versions to release incompatible changes means you’ll think through the impact of your changes, and evaluate the cost/benefit ratio involved.

Scalatest should have made a major version bump if they felt strongly about this change (unless they don’t follow semver).

There is an autofix for this that’s available via SBT, Mill, and Maven. I personally don’t want to install a plugin to fix import statements in my code. In any case, this supports the argument that maintaining Scala is work.

Spark and popular testing libraries like spark-testing-base depend on Scalatest core classes. spark-testing-base users won’t be able to use the latest version of Scalatest.

What should spark-testing-base do? They already have a two dimensional build matrix for different versions of Scala & Spark. Should they make a three dimensional build matrix for all possible combinations of Scala / Spark / Scalatest? spark-testing-base already has 592 artifacts in Maven.

There are no good solutions here. Stability and backwards compatibility are impossible when multiple levels of core language components are all making breaking changes.

When is Scala a suitable language

This maintenance discussion might have you thinking “why would anyone ever use Scala?”

Scala is really only appropriate for difficult problems, like building compilers, that benefit from powerful Scala programming features.

As Reynolds mentioned, Scala is a good language for Spark because Catalyst and Tungsten rely heavily on pattern matching.

Scala should be avoided for easier problems that don’t require advanced programming language features.

Building relatively maintainable Scala apps

Even the most basic of Scala apps require maintenance. Upgrading minor Scala versions can cause breaking changes and SBT versions need to be bumped regularly.

Scala projects with library dependencies are harder to maintain. Make sure you depend on libraries that are actively maintained and show a pattern of providing long term support for multiple Scala versions.

Prioritize dependency free libraries over libs that’ll pull transitive dependencies into your projects. Dependency hell is painful in Scala.

Go to great lengths to avoid adding library dependencies to your projects. Take a look at the build.sbt file of one of my popular Scala libraries and see that all the dependencies are test or provided. I would rather write hundreds of lines of code than add a dependency, especially to a library.
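Here’s a minimal sketch of what a “test or provided only” dependency list can look like. It isn’t copied from the library mentioned above, and the Spark and utest versions are just examples.

```scala
// build.sbt (illustrative; versions are assumptions)
scalaVersion := "2.12.12"

// "provided": needed to compile, but supplied by the runtime (e.g. the Spark cluster),
// so it is not passed on to library users as a transitive dependency.
libraryDependencies += "org.apache.spark" %% "spark-sql" % "3.1.1" % "provided"

// "test": only on the test classpath, never seen by library users.
libraryDependencies += "com.lihaoyi" %% "utest" % "0.7.7" % "test"
testFrameworks += new TestFramework("utest.runner.Framework")
```

A library built this way adds nothing to the dependency tree of the projects that use it.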

Don’t use advanced SBT features. Use the minimal set of features and try to avoid multi-project builds.

Use the minimal set of SBT plugins. It’s generally better to skip project features than to add an SBT plugin. I’d rather not have a microsite associated with an open source project than add an SBT plugin. I’d definitely rather not add an SBT plugin to rename Scalatest classes for major breaking changes that happened in a minor release.

The Scalatest Semantic Versioning infraction motivated me to shift my projects to utest and munit. Grateful for Scalatest’s contributions to the Scala community, but want to avoid the pain of multiple redundant ways of doing the same thing.

Shifting off SBT and using Mill isn’t as easy. Li’s libs seem to be the only popular ones to actually be using Mill. The rest of the community is still on SBT.

Most devs don’t want to learn another build tool, so it’ll be hard for Mill to get market share. I would build Mill projects, but think it’d hinder open source contributions cause other folks don’t want to learn another build tool.

Conclusion

Scala is a powerful programming language that can make small teams highly productive, despite the maintenance overhead. All the drawbacks I’ve mentioned in this post can be overcome. Scala is an incredibly powerful tool.

Scala can also bring out the weirdness in programmers and create codebases that are incredibly difficult to follow, independent of the maintenance cost. Some programmers are more interested in functional programming paradigms and category theory than the drudgery of generating business value for paying customers.

The full Scala nightmare is the double whammy of difficult code that’s hard to modify and a high maintenance cost. This double whammy is why Scala has a terrible reputation in many circles.

Scala can be a super power or an incredible liability that sinks an organization. At one end of the spectrum, we have Databricks, a $28 billion company that was built on Scala. At the other end of the spectrum, we have an ever growing graveyard of abandoned Scala libraries.

Only roll the dice and use Scala if your team is really good enough to outweigh the Scala maintenance burden costs.

Permalink

I hear you. It’s also a hard language to get right. The learning curve is steep. I switched to Golang recently, and I am surprisingly pleased. Now I am not saying Go is better than Scala.

Permalink

I’ve used a bit of Golang and I would feel bad having to use that language. Terribly verbose, and reminds me a lot of pre Java 5/6, and a bit unreadable because of that. Certainly a lot nicer than C though, but I wouldn’t want to use it for business applications with large code-bases.

The whole binary versioning system of Scala is quite insane indeed. Fortunately, from what I read, Scala 3 is backwards-compatible with Scala 2.13 (https://scalacenter.github.io/scala-3-migration-guide/docs/compatibility.html). So we might not have to go through upgrade hell again.

Permalink

Lots of hand picked examples for what amounts to a pretty meaningless article to anyone in the Scala community, since almost none of this is a problem as you describe, but potentially decent click-bait for hackernews to attract traffic.

Semantic versioning is a choice that many projects may decide to adopt, in the Scala community or others, and it’s by no means a defect of “Scala” or its community as a whole.

The Scala versions 2.10, 2.11, and 2.12 should be treated as major versions, and 2 -> 3 as an even larger major version. In practice this doesn’t cause problems, and is an actual solution for most projects, since most codebases will gladly work as long as you use the right target, and cross compilation is actually a powerful and very practical tool, again, not a defect.

Scala also has many benefits even compared to Java projects in terms of advanced tooling support like SBT, where code can be compiled, tested, built, packaged and deployed all from within the same tool and language, not requiring XML plugins (maven) or groovy/kotlin (gradle).

As for ScalaTest, their choice of changing versions and deprecations, at least, didn’t use a good versioning scheme. Either way, Scala has numerous testing libraries and a rich and powerful ecosystem. It’s perfectly possible to keep migrating old versions of ScalaTest, but some projects may choose to use newer libraries, and that’s absolutely fine. Thanks to the strong compiler, fixing large codebases from one test approach to another may be as simple as a ScalaFix rule to recompile all your code automatically.

Scala is indeed a powertool, and Scala 3 will make even the simplest project a breeze. The fact that many people in the community reach very proficient status is a testament of the highly talented pool of developers. What we need is more people to recognize the benefits.

This post argues that Scala is a maintenance nightmare, and yet most problems argued here, except for Spark versioning, which is a highly specific issue, are quite trivial.

By the way, Spark now compiles with Scala 2.13, which makes it much more likely to match Scala 3.

Permalink

I’ve been writing almost exclusively Scala for the last 5 to 7 years and have seen many dark corners of the language. While it still remains my language of choice, the problem described by the author is very real.

Hopefully, this problem will be properly addressed by Scala 3 with its intermediate binary format called Tasty.

Permalink

Spark was used as an example, but the maintenance issues carry over to other libraries / ecosystems as well.

I’ve faced crazy dependency hell issues with Mongo as well.

This commentor also pointed out maintenance issues with the Play Framework: https://www.reddit.com/r/scala/comments/maw2wf/scala_is_a_maintenance_nightmare_mungingdata/grx4tp5?utm_source=share&utm_medium=web2x&context=3

Some folks are saying, “well you don’t have to update your Play versions”, but you do have to make upgrades if you want security patches and bugfixes.

The Reddit comment thread taught me that Scala follows the epoch.major.minor versioning scheme, so you’re right, Scala 2.11 => Scala 2.12 is a major version bump.

Don’t think that nullifies my point. How many major Scala versions have there been? More than 20?

Permalink

You wrote, “Scalatest, the most popular Scala testing framework, recently decided to completely ignore semantic versioning and make a major breaking change with a minor release.” That’s false.

You wrote, “Scalatest 3.1.0 changed class names and broke existing imports.” That’s also false.

You wrote, “The fix for this breaking change… is a SBT plugin of course.” And that is false.

We did begin a major change in 3.1.0 so that we could modularize ScalaTest in 3.2.0, but we did it through a deprecation process. Over the history of the project, we have taken extreme care to keep user’s existing codebases working, to make upgrading easy. I tried to deprecate anything we change for one to two years, but then to keep the API clean of old cruft, we do remove it before two years has elapsed. That is when the break happens, not the version in which it is deprecated. In this case 3.2.0 came out less than a year later, but the process was the same. Every removal in 3.2.0 was deprecated first in 3.1.0.

The semantic versioning spec is vague on the distinction between binary and source compatibility. ScalaTest has basically traditionally followed Scala’s approach, which is patch releases are binary compatible, though in ScalaTest’s case, just backwards compatible (Scala maintains forward and backward binary compatibility in patch releases). Moreover, in ScalaTest we have attempted to never break source compatibility in a minor release, except removing things that had been previously deprecated. My understanding of the semantic versioning spec has been that it does allow deprecation removals in a minor release. That’s what we have always tried to do. In 3.1.0, we removed some deprecations from 3.0.8, and we started some deprecations that we intended to remove in 3.2.0. Thus all that happened in 3.1.0 was old names were deprecated and new names were added. No source code anywhere in the massive installed base of ScalaTest code should have broken upgrading from 3.0.8 to 3.1.0, so long as any 3.0.8 deprecation warnings were cleared before the upgrade.

Please correct your article. ScalaTest did not make a major breaking change in 3.1.0, and we did not ignore semantic versioning. We did not change class names in 3.1.0, we added new ones and deprecated old ones. No existing imports broke either. And we did all of that work for free.

Because the 3.1.0 deprecations involved a large number of name changes, we offered a ScalaFix tool to do the renaming for you. There is indeed an sbt plugin, but the ScalaFix tool can be used other ways. Right there on the page it says Maven and Mill plugins are also available:

https://github.com/scalatest/autofix/tree/master/3.1.x

Please correct your article where it complains that we only provided an sbt plugin. We provided a ScalaFix tool that can be run with sbt, but doesn’t have to be run with sbt. We gave that tool away for free also.

We pour a lot of time and effort and real money into ScalaTest, and we give it away for free. The least you could do, a user who used our work for free, is tell the truth about it. Please correct your article.

Thanks.

Bill

Permalink

Thanks for the comment. Updated the post, but don’t think the crux of what I was communicating was inaccurate.

* Upgrading to Scalatest 3.2 broke the code (I mistakenly said 3.1)

* 3.1 => 3.2 is a semver minor version bump. Deleting deprecated code isn’t allowed for minor version bumps if you follow semver (guess it’s fine if you follow another versioning scheme).

* There is a plugin to fix the code (I said it was an SBT plugin, but there are Maven / Mill plugins too – same point)

I appreciate your work on Scalatest and your contributions to the Scala community. Sorry if my post hurt your feelings.

Permalink

I see what you’re saying now. I looked again at the semver spec and it indeed does say that any backwards incompatible change should be given a major version bump, *even after a deprecation cycle*:

“Deprecating existing functionality is a normal part of software development and is often required to make forward progress. When you deprecate part of your public API, you should do two things: (1) update your documentation to let users know about the change, (2) issue a new minor release with the deprecation in place. Before you completely remove the functionality in a new major release there should be at least one minor release that contains the deprecation so that users can smoothly transition to the new API.”

We have never done that, thus I realize now you’re right that we have not been following semver for ScalaTest. What we do differently is that after a one to two year deprecation cycle we allow ourselves to remove such long deprecated features in a minor release.

I do recall making this choice originally based on how Scala itself was being evolved, not by looking at semver. Scala was binary incompatible from one minor version to the next, and only bumped the major version to indicate a very major upgrade.

What we do is ensure backwards binary compatibility (tested with MIMA) for patch releases, source compatibility for patch and minor releases, however, allowing ourselves to remove in minor releases features that have been deprecated for a good long while. What we use major releases for is source-breaking changes for which we could *not* do a deprecation cycle. We’ve only done that twice in ScalaTest history, 1.x.y to 2.0.0 and 2.x.y to 3.0.0, and we kept such breakages as small and rare as possible.

This approach does mean we ask users to do a recompile on every minor release update, because those are binary incompatible (and we may have removed something long deprecated). The approach may not make as much sense for production (non-test) libraries, where a recompile might be less acceptable.

Sébastien Doeraene follows a very different approach for Scala.js. That’s described here:

http://lampwww.epfl.ch/~doeraene/presentations/binary-compat-scala-sphere-2018/#/25

He has tried to convince me to follow that approach, but we have yet to change our ways.

One thing we have been doing in ScalaTest, which I observe with a lot of software that uses major.minor.patch versioning, is that the major version number is a kind of “brand name” for the software. “Scala 2” was used like a brand name, and 2 was the major version of all those releases. “Scala 3” is now being used like a brand name, and 3 will be the major version of all its releases. We did that with ScalaTest 2 and ScalaTest 3 also, and that branding factor was a driver of us not wanting to bump the major version after removing features after a decent deprecation cycle.

Anyway, thanks for updating your post.

Permalink

It’d be great if you could “undo” the breaking Scalatest 3.2 changes and make a Scalatest 3.3 release that’s backwards compatible with Scalatest 3.1.

This would provide a good upgrade path for a lot of your users.

The Scalafix solution will not work for a lot of the big codebases that depend on your library. I don’t think Spark is going to install an SBT plugin and use an autofix that changes tons of test files in the source code (and creates a rebase hell situation for all the outstanding pull requests). I will ping the Spark mailing list to see how they’ll handle this situation.

Permalink

Good article. Judging from the Scala reddit post, you hit a few elitist nerves.:)

BTW, I thought Python overtook Scala on Spark a few years back.

Permalink

Yea, some of the comments from the Scala community are really funny – love their passion for programming languages!

Yea, the PySpark API is more popular than the Scala API now for Spark. Check out this article for a detailed comparison of the two APIs if you’re interested: https://mungingdata.com/apache-spark/python-pyspark-scala-which-better/

Permalink

very good article! Yeah searching for the right version of library that has to have one particular scala version inside (e.g. scala 2.12 in `org.apache.spark:spark-sql_2.12:jar:3.1.1`) is pretty annoying.

Hopefully, Scala 3 will address this problem a bit

Permalink

wonderful solid/critical article without glossing over, I’m happy about it, and it also agrees with my experiences in many places.

With confidence I am looking forward to upcoming Scala projects and a big thank you to all developers who made it possible.

Permalink

Thanks for the article. Most comments correspond to my own experience with Scala.

Separate thanks for mentioning alternatives to scalatest. Its loose syntax(es) will always amuse me.

Though SBT is a somewhat unique tool, it has very solid documentation that needs to be read. For tasks where you’d need a plugin in maven, in sbt you just solve a problem with a line of scala code.