Install PySpark, Delta Lake, and Jupyter Notebooks on Mac with conda

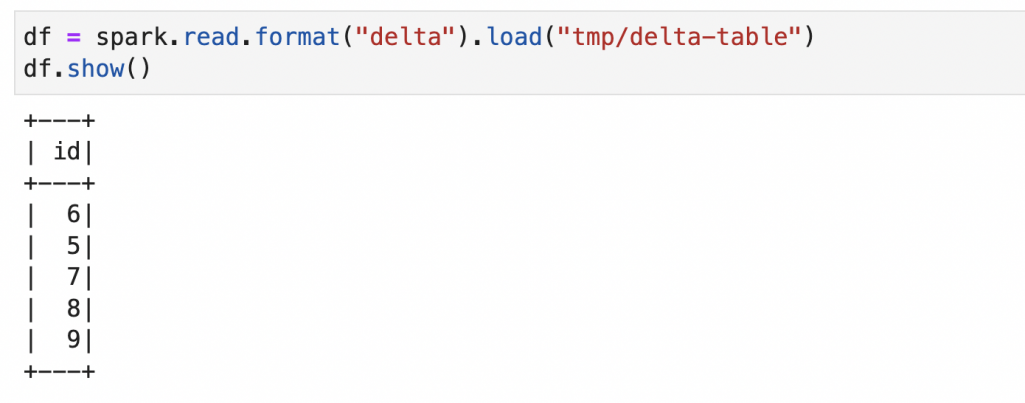

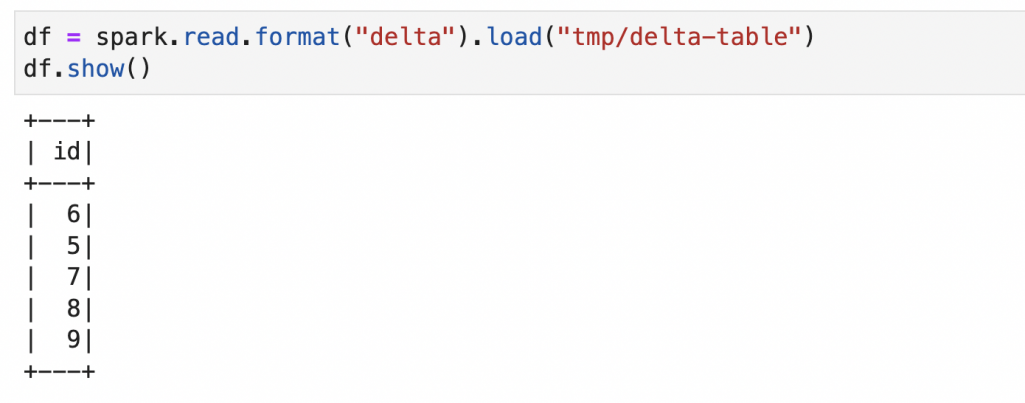

This blog post explains how to install PySpark, Delta Lake, and Jupyter Notebooks on a Mac. This setup will let you easily run Delta Lake computations on your local machine […]

PySpark

This post explains how to create DataFrames with ArrayType columns and how to perform common data processing operations. Array columns are one of the most useful column types, but they’re […]

This post explains how to define PySpark schemas and when this design pattern is useful. It’ll also explain when defining schemas seems wise, but can actually be safely avoided. Schemas […]

This post explains how to add constant columns to PySpark DataFrames with lit and typedLit. You’ll see examples where these functions are useful and when these functions are invoked implicitly. […]

This blog post shows you how to gracefully handle null in PySpark and how to avoid null input errors. Mismanaging the null case is a common source of errors and […]

This post explains how to create a SparkSession with getOrCreate and how to reuse the SparkSession with getActiveSession. You need a SparkSession to read data stored in files, when manually […]

This post shows you how to select a subset of the columns in a DataFrame with select. It also shows how select can be used to add and rename columns. […]

This post explains how to filter values from a PySpark array column. It also explains how to filter DataFrames with array columns (i.e. reduce the number of rows in a […]

Multiple PySpark DataFrames can be combined into a single DataFrame with union and unionByName. union works when the columns of both DataFrames being combined are in the same order. It […]

This post shows the different ways to combine multiple PySpark arrays into a single array. These operations were difficult prior to Spark 2.4, but now there are built-in functions that […]